Wednesday, March 29, 2023

Monday, March 27, 2023

Dear developer, how will you know when you're finished?

That thing you're working on:

- How are you measuring progress?

- Are you making progress?

- Do others depend on what you're doing and need to know what you'll produce and when it will be finished?

- How will others test it when you've finished?

- How do you know you are going in the right direction?

- How will you know when it's done?

You should probably work this out before you start!

A few years ago, I was invited to give a talk at a university. I was asked to talk about how to think about building software. Very vague, but I interpreted it as a reason to talk about the things I think about before starting to code.

Among the things I mentioned was the Definition of Done. (The best thing Scrum has given us.)

The students had never thought about this before.

The lecturers/tutors/staff had never thought of this before.

If you're not familiar, the idea is to specify/document how you'll know that you're finished. What are the things it needs to do? When they're all done, you've finished.

The staff have since added it to what they teach as part of the curriculum!

Without knowing when you're done, it's hard to know when to stop.

Without knowing when you're done, you risk stopping too soon or not stopping soon enough.
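One way to make this concrete is to write the Definition of Done down as a checklist that can be evaluated. The following is only a minimal sketch: the `Criterion` type, the `state` dictionary, and the three example criteria are all made up for illustration, and a real list would come from whatever the work actually needs to do.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    """One item on a (hypothetical) Definition of Done checklist."""
    description: str
    is_met: Callable[[], bool]  # returns True once this item is satisfied


def remaining(criteria: list[Criterion]) -> list[str]:
    """Describe every criterion that has not been met yet."""
    return [c.description for c in criteria if not c.is_met()]


def is_done(criteria: list[Criterion]) -> bool:
    """The work is finished only when nothing remains."""
    return not remaining(criteria)


# Illustrative project state; in practice these would be real checks.
state = {"tests_pass": True, "docs_updated": False, "reviewed": True}

definition_of_done = [
    Criterion("All automated tests pass", lambda: state["tests_pass"]),
    Criterion("User documentation is updated", lambda: state["docs_updated"]),
    Criterion("Change has been reviewed", lambda: state["reviewed"]),
]

print(is_done(definition_of_done))    # not finished while anything remains
print(remaining(definition_of_done))  # what still stands between you and "done"
```

The list of remaining items doubles as a rough measure of progress: when it is empty, it's time to stop.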

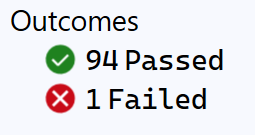

I like causing tests to fail

I like it when tests start failing.

Not in a malicious, wanting-to-break-things way.

I like it when I make a change to some code and then tests fail.

This could seem counterintuitive, and it surprised me when I noticed it.

If an automated test starts failing, that's a good thing. It's doing what it's meant to.

I'd found something that didn't work.

I wrote a test.

I changed the code to make the test pass.

Ran all the tests.

Saw that some previously passing tests now failed.

I want to know this as quickly as possible.

If I know something is broken, I can fix it.

The sooner I find this out, the better, as finding and fixing the issue early saves time and money.

Compare the time, money, and effort involved in fixing something while you're still working on it with fixing it once it's in production.

Obviously, I don't want to be breaking things all the time, but when I do, I want to know about it ASAP!
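The sequence described above (find a bug, write a test, change the code, run everything, see an older test fail) can be sketched in a few lines. This is an invented example rather than the actual code involved: the `slugify_before` and `slugify_after` functions and the three checks are hypothetical stand-ins.

```python
import re


def slugify_before(title: str) -> str:
    """Original (hypothetical) behaviour: lower-case, spaces become hyphens."""
    return title.lower().replace(" ", "-")


def slugify_after(title: str) -> str:
    """The 'fix': collapse any run of non-alphanumerics into one hyphen."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


# Each check is (name, input, expected output).
CHECKS = [
    ("single_space", "Hello World", "hello-world"),
    ("keeps_underscores", "snake_case name", "snake_case-name"),
    # New test written for the bug that was just found:
    ("double_space", "Hello  World", "hello-world"),
]


def failing_checks(slugify):
    """Return the names of the checks that fail for the given implementation."""
    return [name for name, text, expected in CHECKS if slugify(text) != expected]


print("before the fix:", failing_checks(slugify_before))
print("after the fix: ", failing_checks(slugify_after))
```

Before the change, only the new `double_space` check fails. After it, that check passes but `keeps_underscores` starts failing, which is exactly the early warning the existing tests are there to give.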

Sunday, March 26, 2023

Aspects of "good code" (not just XAML)

I previously asked: what does good code look like?

Here's an incomplete list.

It should be:

- Easy to read

- Easy to write

- Clear/unambiguous

- Succinct (that is, not unnecessarily verbose)

- Consistent

- Easy to understand

- Easy to spot mistakes in

- Easy to maintain/modify

- Supported by good tooling

In thinking about XAML, let's put a pin in that last point (as we know, XAML tooling is far from brilliant) and focus on the first eight points.

Does this sound like the XAML files you work with?

Would you like it to be?

Have you ever thought about XAML files in this way?

If you haven't ever thought of XAML files in terms of what "good code looks like" (whether that's the above list or your own), is that because "all XAML looks the same, and it isn't great"?

Saturday, March 25, 2023

Is manual testing going to become more important when code is written by AI?

It's part concern over the future of my industry, part curiosity, and part interest in better software testing.

[Image: AI generated this image based on the prompt "software testing"]

As the image above shows, it's probably not the time to start panicking about AI taking over and making everyone redundant, but it is something to be thinking about.

If (and it might be a big "if") AI is going to be good at customer support and writing code, it seems there's still a big gap for humans in the software support, maintenance, and improvement process.

Given that most software development involves modifying existing code rather than writing it from scratch, I doubt you'll argue with me that adding features and fixing bugs is a major part of what a software developer does.

This still leaves a very important aspect that requires human knowledge of the problem space and code.

Won't we, at least for a long time, still need people to:

- Understand how the reported issue translates into a modification to code

- Convert business requirements to something that can be turned into code

- Verify that the code proposed (or directly modified) by AI is correct and does what it should?

Having AI change code because of a possible exception is quite straightforward. Explaining a logic change in relation to existing code could be very hard without an understanding of the code.

And, of course, we don't want AI modifying code and also verifying that it has done so correctly because it can't do this reliably.

There's also a lot of code that isn't covered by automated tests and/or must be tested manually by humans.

Testing code seems like a job that won't be threatened by AI any more than other software development-related jobs. AI is another tool that testers can use. Sadly, I know a lot of testers who rely heavily on manual testing, and so will be less inclined to use this tool when they aren't using any of the others already available to them.